Convolutional Neural Networks (CNNs) are a class of deep neural networks, most commonly applied to image data.

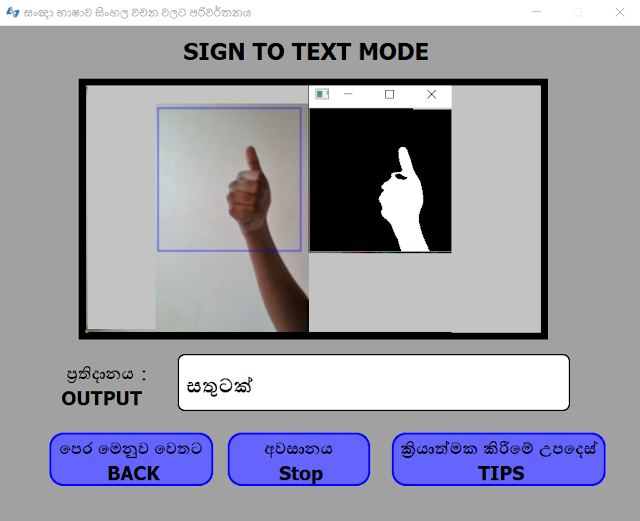

In our project, we used a CNN to classify the gestures. The image above shows the summary of the model. We used 5 classes of Sinhala gestures ("ආයුබෝවන්", "ඔබ", "හමුවීම", "සතුටක්", "මම ඔබට ආදරෙයි") for classification. To train the model, there are 3000 images for each sign, including black images with some background noise, for a total of 18000 images.

Each input image to the CNN is 64×64 pixels (width × height). We used 3 convolution layers, each with a layer size of 32. The output of a convolution can contain negative values, so a rectified linear unit (ReLU) is applied to replace the negative values with zero. The outputs of this layer are called feature maps. After a convolution layer, it is common to apply a pooling layer. This is important because pooling reduces the dimensionality of the feature maps, which in turn reduces the network training time. Most importantly, the model set aside a fraction of 0.3 (30%) of the images as validation data.
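To make the ReLU and pooling steps concrete, here is a minimal pure-Python sketch. It is an illustration and not our actual model code. The feature-map values are made up, and the 3×3 kernel, no-padding convolution, and 2×2 pooling used in `trace_shapes` are assumptions, since those settings are not listed here. The helper names (`relu`, `max_pool_2x2`, `trace_shapes`) are hypothetical.

```python
def relu(feature_map):
    """Replace every negative value with zero."""
    return [[max(0.0, v) for v in row] for row in feature_map]


def max_pool_2x2(feature_map):
    """Keep the largest value of each non-overlapping 2x2 window."""
    rows, cols = len(feature_map), len(feature_map[0])
    return [
        [
            max(
                feature_map[r][c], feature_map[r][c + 1],
                feature_map[r + 1][c], feature_map[r + 1][c + 1],
            )
            for c in range(0, cols - 1, 2)
        ]
        for r in range(0, rows - 1, 2)
    ]


def trace_shapes(size, num_blocks, kernel=3, pool=2):
    """Side length of the feature map after each conv + pool block.

    Assumes an unpadded convolution with stride 1 followed by pooling
    (assumed settings, not taken from the model summary).
    """
    shapes = []
    for _ in range(num_blocks):
        size = size - kernel + 1  # unpadded convolution shrinks the map
        size = size // pool       # pooling divides the side length
        shapes.append(size)
    return shapes


# A made-up 4x4 convolution output that contains negative values.
conv_output = [
    [-1.0, 2.0, 0.5, -3.0],
    [4.0, -2.0, -0.5, 1.0],
    [-1.5, -0.5, 3.0, 2.0],
    [0.0, -4.0, -1.0, -2.0],
]

activated = relu(conv_output)     # negatives become 0.0
pooled = max_pool_2x2(activated)  # 4x4 -> 2x2
print(pooled)                     # [[4.0, 1.0], [0.0, 3.0]]

# 64x64 input through 3 conv + pool blocks under the assumed settings.
print(trace_shapes(64, 3))        # [31, 14, 6]
```

Under these assumed settings, each block shrinks the feature maps, which is why pooling cuts the amount of computation in later layers.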

CNNs are one of the preferred techniques for deep learning on images. Their architecture allows automatic extraction of diverse image features, such as edges, circles, lines, and textures. The extracted features are progressively refined in deeper layers.
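As a small illustration of how a convolution can pick out an edge, the sketch below slides a 3×3 kernel over a tiny image. The kernel here is hand-picked for the example; in a CNN the kernel weights are learned during training. The image values and the function name `convolve2d_valid` are hypothetical.

```python
def convolve2d_valid(image, kernel):
    """Slide the kernel over the image with stride 1 and no padding.

    Like CNN layers, this does not flip the kernel (cross-correlation).
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(
                image[r + i][c + j] * kernel[i][j]
                for i in range(kh)
                for j in range(kw)
            )
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


# Made-up 4x6 image: dark left half (0), bright right half (1).
image = [
    [0, 0, 0, 1, 1, 1],
    [0, 0, 0, 1, 1, 1],
    [0, 0, 0, 1, 1, 1],
    [0, 0, 0, 1, 1, 1],
]

# Hand-picked kernel that responds to dark-to-bright vertical edges.
vertical_edge = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]

response = convolve2d_valid(image, vertical_edge)
for row in response:
    print(row)
# [0, 3, 3, 0]
# [0, 3, 3, 0]
```

The response is non-zero only where the window straddles the boundary between the dark and bright halves, and zero in the flat regions.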